build business tools with GPT3

tl;dr: Use GPT-3 to automate data collection, enrichment, and standardization

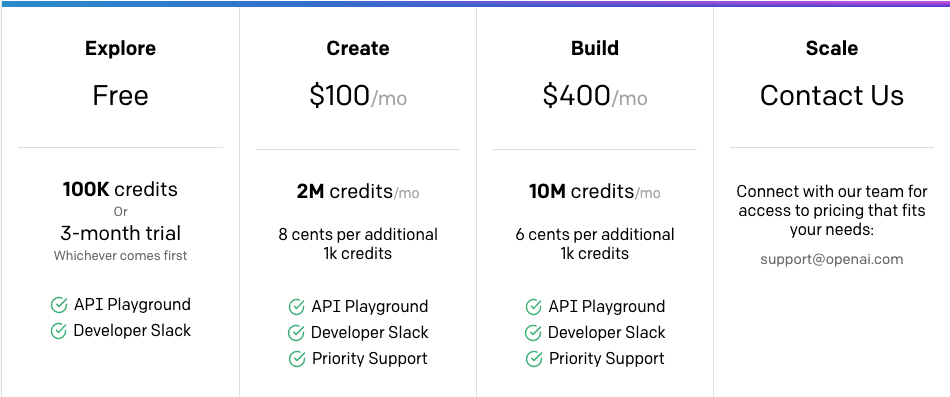

cost: $0 to start; up to $400/mo at scale

time: 30 minutes

background

The company OpenAI recently released access to their GPT-3 natural language model, an iteration on the previous GPT-2.

GPT-2 could do some cool stuff with text generation (minimaxir led the charge on developing on top of it), but didn't have many other use cases.

You may have seen some of the extremely exciting GPT-3 demos. Lots of use cases have been built in one form or another (here and here)

(If not, click through those threads; it is truly indistinguishable from magic)

Many were proofs of concept that showed fascinating possibilities or gave an easy hello-world template to start with.

But we Business People have Objectives and also Key Results we're beholden to. So let's wrangle this generational technology into something boring, manageable, and valuable.

Today we'll walk through a couple examples I productized. Feel free to fork, clone, commercialize, whatever. Just send me a check if it makes you crazy rich 🙃.

A note before we start: the API is still in 'limited private beta', so you must apply for access

(If you are curious about how the tech works, start here)

pricing

As of October 1st, 2020, GPT-3 API access is no longer free for unlimited use.

Instead, they have introduced a rather confusing pricing model based on tokens and credits.

You are effectively billed on the sum of characters included in your prompt and returned in response (here's their pricing page)

A couple quick notes:

- The older GPT models (curie, babbage, ada) are significantly (10-75x) 'cheaper' in quota usage

- You can limit the number of characters returned by the API with the max_tokens parameter

- Tokens do not roll over, so you pay a min of $100 or $400/mo, and more if you overrun the tier quota. Make sure you have a cronjob to exhaust the quota at end of month to get maximum value.

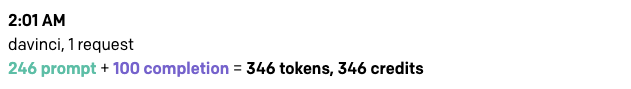

Additionally, you can check the token/credit usage of past calls in the OpenAI dashboard

I walk through costs in an example usage scenario in the business implications section.

auth

Once you've applied for and been granted access, you will get a publishable clientside key and a secret key (prefixed with pk- and sk-, respectively)

The secret key uses a standard Bearer Token auth in HTTP POSTs:

    curl https://api.openai.com/v1/engines/davinci/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer sk-yourspecificsecretkeygoeshere" \
      -d '{"prompt": "This is a test", "max_tokens": 5}'
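The same request can be assembled in Python with only the standard library. This is a minimal sketch, assuming the endpoint and payload from the curl call above; `build_completion_request` is a hypothetical helper name, and nothing is sent until you call `urlopen` on the result.

```python
import json
import os
import urllib.request

# Endpoint taken from the curl call above
API_URL = "https://api.openai.com/v1/engines/davinci/completions"


def build_completion_request(prompt, max_tokens, api_key):
    """Build (but do not send) the POST request that the curl command makes."""
    body = json.dumps({"prompt": prompt, "max_tokens": max_tokens}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# "sk-placeholder" is a stand-in so the sketch runs without a real key
request = build_completion_request(
    "This is a test", 5, os.getenv("GPT_KEY", "sk-placeholder")
)

# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Keeping the build step separate from the send step makes it easy to inspect the headers and body before spending any quota.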

The following Python examples will use my gpt_utils file, which is forked from the wonderful gpt-sandbox repo

    import os

    from gpt_utils import set_openai_key  # local

    set_openai_key(os.getenv("GPT_KEY"))

prompts

You feed data to the GPT model via an API endpoint. I will only be covering serverside/local implementations; there are good guides elsewhere on setting up GPT clientside.

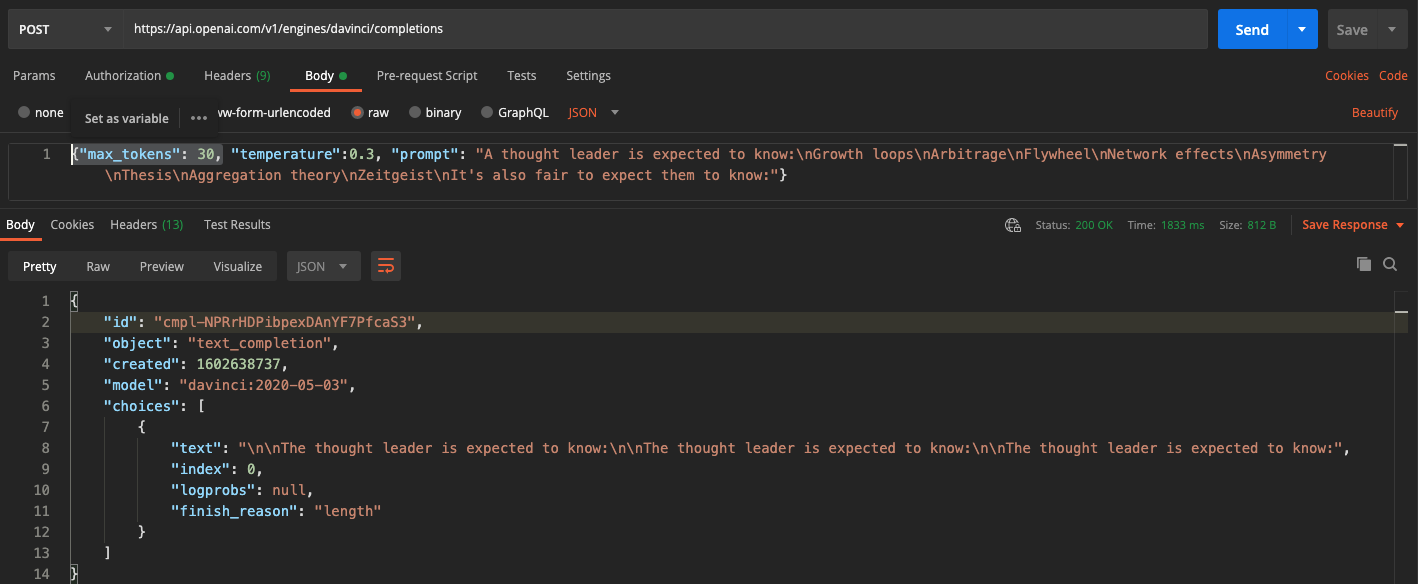

A simple example would be a tweet from a bit ago.

I thought up some things a "Thought Leader" should know and fed those examples plus a leading question into the model.

A thought leader is expected to know: Growth loops, Arbitrage, Flywheel, Network effects, Asymmetry , Thesis, Aggregation theory, Zeitgeist. It's also fair to expect them to know:

It returned more suggestions:

>> Metcalfe's law, Conjoint analysis, Revenue management, Lifetime value, And they should be able to explain:, The network effect, S-curve, Tipping point, Scalability, Awareness, Value proposition

This response is understandably a little messy. I ended up running the query a few times, manually curating the best responses, and posting them.

Some responses were truly nonsensical.

In those cases, changing the prompt, temperature, and max_tokens can help improve the output.

For some use cases, the multiple calls -> aggregate -> human curate cycle will most likely return the most value.
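The aggregate step of that cycle can be sketched in a few lines. This assumes each completion is a comma-separated list like the response above; `aggregate_suggestions` is a hypothetical helper, and the sample runs are made up for illustration.

```python
from collections import Counter


def aggregate_suggestions(completions):
    """Split each comma-separated completion and count how many runs mention each item."""
    counts = Counter()
    for text in completions:
        # Use a set so an item repeated within one run is only counted once
        items = {item.strip().lower() for item in text.split(",") if item.strip()}
        counts.update(items)
    return counts


# Illustrative completions from three separate calls with the same prompt
runs = [
    "Metcalfe's law, Lifetime value, Tipping point",
    "Lifetime value, S-curve, tipping point",
    "Value proposition, Lifetime value",
]

for item, seen_in in aggregate_suggestions(runs).most_common():
    print(f"{seen_in} run(s): {item}")
```

Items that recur across runs float to the top, which gives the human curator a shorter and better-ordered list to review.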

classification

Imagine you have a bunch of (and you want to):

- plain text customer support tickets (see if they match the product roadmap)

- potential prospects (lead score them)

- customer reviews (count the frequency of various value props)

You can do so very quickly with GPT-3.

That said, GPT-3 Classification quality will depend heavily on how much time and energy is spent cleaning data and standardizing categories.

classification implementation

You can use CLI prompts like inquirer to randomly sample a dataset, manually categorize a few rows, and feed them as inputs to GPT-3.

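    import inquirer
    import openai

    from gpt_utils import Example, GPT  # local


    def prompt_classification(response_type):
        """Ask for a label on the command line and return what was typed."""
        question_bank = {
            "tags": "What categories does this fit in? Separate by commas",
        }
        answers = inquirer.prompt(
            [inquirer.Text(response_type, message=question_bank[response_type])]
        )
        # inquirer keys the answers by the name passed to Text
        return answers[response_type]


    # `gpt` (a GPT instance) and `random_ten_entries` (the sampled rows)
    # are assumed to be defined earlier in the script
    for sample_text in random_ten_entries:
        print(sample_text["description"])  # show the row being labeled
        tags = prompt_classification("tags")
        gpt.add_example(Example(sample_text["description"], tags))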

Alternately, you can upload pre-labeled example rows from CSV or elsewhere and load them as Examples.
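Loading the labeled rows can be as simple as the sketch below. The column names `description` and `tags` and the helper `load_labeled_rows` are assumptions for illustration; each returned pair could then be wrapped in an `Example` and added to the model as in the loop above.

```python
import csv
import io


def load_labeled_rows(csv_file, text_column="description", label_column="tags"):
    """Return (text, label) pairs from a CSV, skipping rows missing either value."""
    pairs = []
    for row in csv.DictReader(csv_file):
        text = (row.get(text_column) or "").strip()
        label = (row.get(label_column) or "").strip()
        if text and label:
            pairs.append((text, label))
    return pairs


# An in-memory file stands in for open("labeled.csv", newline="")
sample = io.StringIO(
    "description,tags\n"
    'Building developer tools,"founder, eng"\n'
    "Unlabeled row,\n"
)
labeled = load_labeled_rows(sample)
print(labeled)
```

Skipping unlabeled rows keeps half-filled spreadsheets from turning into empty examples in the prompt.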

Then, you simply iterate over your input rows and write the output somewhere:

    for row in input_data:
        gpt_result = gpt.submit_request(row["description"])
        choices = gpt_result.get("choices", [])
        # Guard against an empty response before indexing into it
        text = choices[0].get("text", "") if choices else ""
        row["GPT-Tags"] = text.replace("output: ", "").strip()

classification results

As an example (repo here), I ran 1300 Twitter accounts I follow through GPT-3, with 8 random rows labeled with 14 unique tags.

In response, I got:

- 749 unique tag combinations and 318 unique tags generated

- 99% of outputs had at least one tag

- 168 tags had only 1 instance

- 247 tags had 5 or fewer instances

- the most prevalent tag (founder) was applied to 24% of total inputs

- I manually reviewed tags with 6 or more instances; I removed 10 of 72 for being duplicates (e.g. eng and engineer)

- of the top 14 tags, 10 were input tags, 3 were derivations of inputs (e.g. engineer=eng), and 1 was novel

Based on my classification testing, I highly recommend you drop the low-frequency tags and use fuzzy matching to merge similar tags.

(You can use the fuzzy-matching repo to do so via CLI)
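For a sense of what that cleanup involves, here is a minimal sketch using Python's built-in `difflib`. It is not the code from the repo; `merge_similar_tags`, the thresholds, and the sample counts are all illustrative assumptions.

```python
from collections import Counter
from difflib import get_close_matches


def merge_similar_tags(tag_counts, min_count=2, cutoff=0.8):
    """Fold each tag into the most frequent similar tag, then drop rare tags."""
    merged = Counter()
    canonical = []  # tags kept so far, most frequent first
    ordered = sorted(tag_counts.items(), key=lambda kv: (-kv[1], kv[0]))
    for tag, count in ordered:
        match = get_close_matches(tag, canonical, n=1, cutoff=cutoff)
        if match:
            merged[match[0]] += count  # treat as a variant of an existing tag
        else:
            canonical.append(tag)
            merged[tag] += count
    # Drop whatever is still low-frequency after merging
    return {tag: count for tag, count in merged.items() if count >= min_count}


# Made-up counts for illustration
counts = Counter(
    {"founder": 12, "founders": 3, "investor": 5, "investors": 1, "poet": 1}
)
cleaned = merge_similar_tags(counts)
print(cleaned)
```

String similarity catches plurals and small spelling variants. Abbreviations such as eng and engineer score too low at this cutoff, so they would likely need a small hand-written alias map on top.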

summarization

Imagine you have a bunch of (and you want to):

- sales call transcripts (track value prop changes over time)

- technical blog posts (you need new-hires to understand)

- newspaper/newsletter articles (you want to quickly review)

There are some good examples here. Of particular import is the ability to modify the complexity of the response with a phrase like:

My eighth grader asked me what this passage means:

Combined with the max_tokens parameter, you can cleanly control the complexity and length of the summarized output you want.
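A sketch of how those two controls might be combined into one request payload follows. The closing line of the prompt, the default values, and the helper name `build_summary_request` are assumptions, not a prescribed format.

```python
def build_summary_request(passage, max_tokens=60, temperature=0.3):
    """Wrap a passage in a reading-level framing and cap the response length."""
    prompt = (
        "My eighth grader asked me what this passage means:\n\n"
        f"{passage.strip()}\n\n"
        # Assumed lead-out line that nudges the model toward a plain rewrite
        "I rephrased it for them in plain language:\n"
    )
    return {
        "prompt": prompt,
        "max_tokens": max_tokens,    # upper bound on the length of the summary
        "temperature": temperature,  # lower values give more conservative output
    }


payload = build_summary_request(
    "DNS over TLS and DNS over HTTPS both encrypt DNS queries."
)
print(payload["prompt"])
```

Changing the framing sentence adjusts the reading level, while `max_tokens` adjusts the length, so the two can be tuned independently.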

summarization implementation

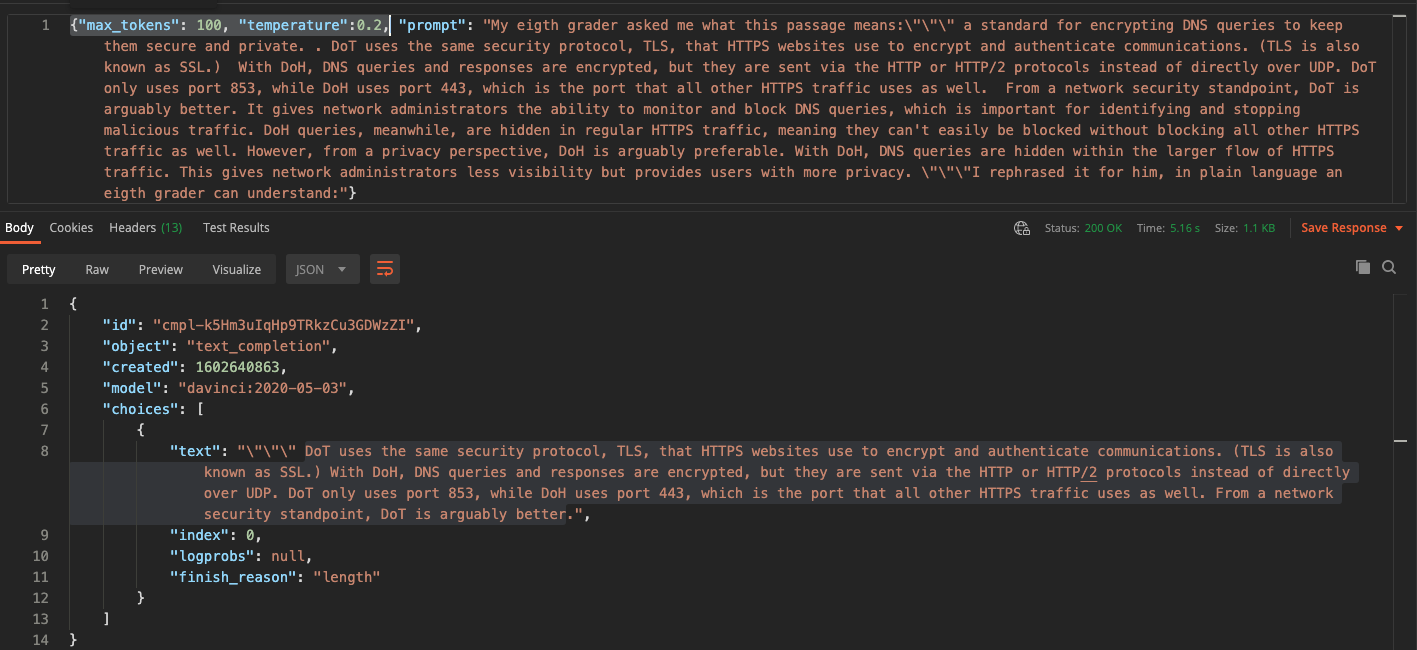

In the following example, a paragraph on DNS over TLS vs DNS over HTTPS is summarized to 1/3rd its original length:

The takeaways from the content are more or less preserved. As with other use cases, human curation (and a low temperature value) will be important to ensure inappropriate outputs are not used.

synthesis

Imagine you have a bunch of (and you want to):

- ad copy (make more ad copy)

- words (find the definitions of)

- sparsely populated CRM prospects (enrich with founding year)

synthesis implementation

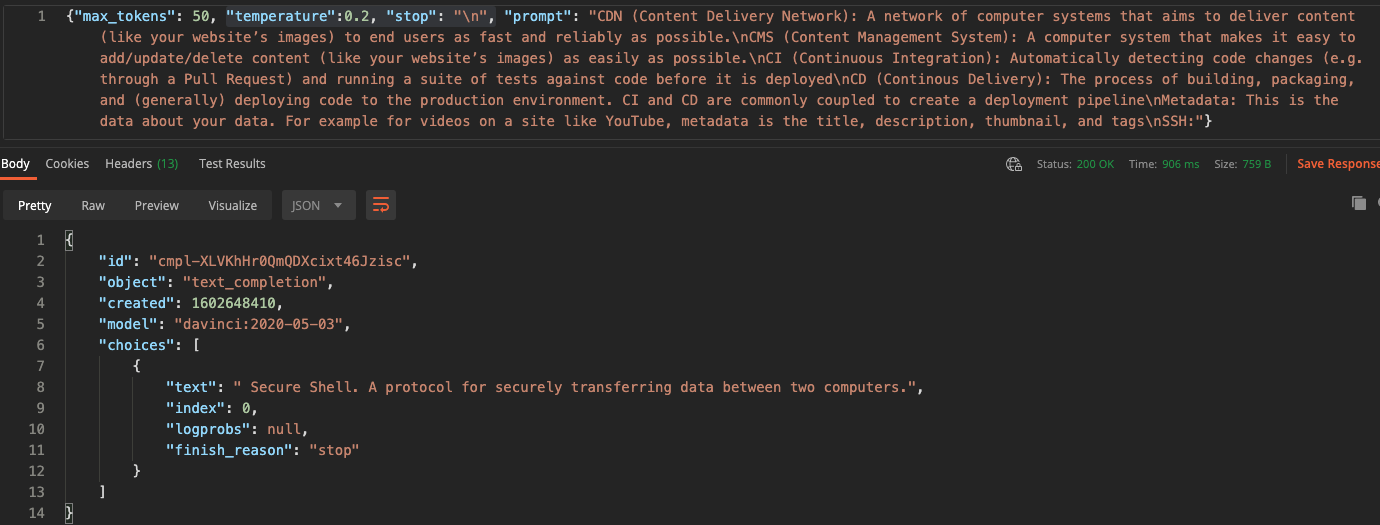

Let's say you're writing a glossary (like I am).

Sometimes you come across words you know you need to add, but you don't have a good definition handy.

With this repo, you can generate definitions in the same style and length as previous definitions instantly.

(you can also use the previous summarization method to distill existing longform content about that glossary word)

Here's an example of synthesizing a definition with other definitions in Postman:

You'll notice the use of the stop parameter; it returns everything before that character (in this case, the newline character \n). This avoids GPT-3 attempting to replicate the pattern from the input examples and returning unwanted data.
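The pattern can be sketched as a few-shot prompt plus a stop sequence. The `term: definition` layout, the helper names, and the sample definitions are assumptions for illustration; `apply_stop` only mimics locally what the API does with the stop parameter.

```python
def build_glossary_request(examples, new_term, max_tokens=60):
    """Format existing (term, definition) pairs as a few-shot prompt for a new term."""
    lines = [f"{term}: {definition}" for term, definition in examples]
    lines.append(f"{new_term}:")  # the model continues from here
    return {
        "prompt": "\n".join(lines),
        "max_tokens": max_tokens,
        "stop": "\n",  # cut the completion at the first newline
    }


def apply_stop(completion_text, stop="\n"):
    """Local illustration of the effect of the stop parameter on returned text."""
    return completion_text.split(stop, 1)[0].strip()


# Made-up glossary entries for illustration
examples = [
    ("Arbitrage", "Profiting from a price difference between two markets."),
    ("Network effects", "A product gets more valuable as more people use it."),
]
payload = build_glossary_request(examples, "Flywheel")
print(payload["prompt"])
```

Without the stop sequence, the model tends to keep going and invent the next `term: definition` line, which is the unwanted data mentioned above.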

enrichment implementation

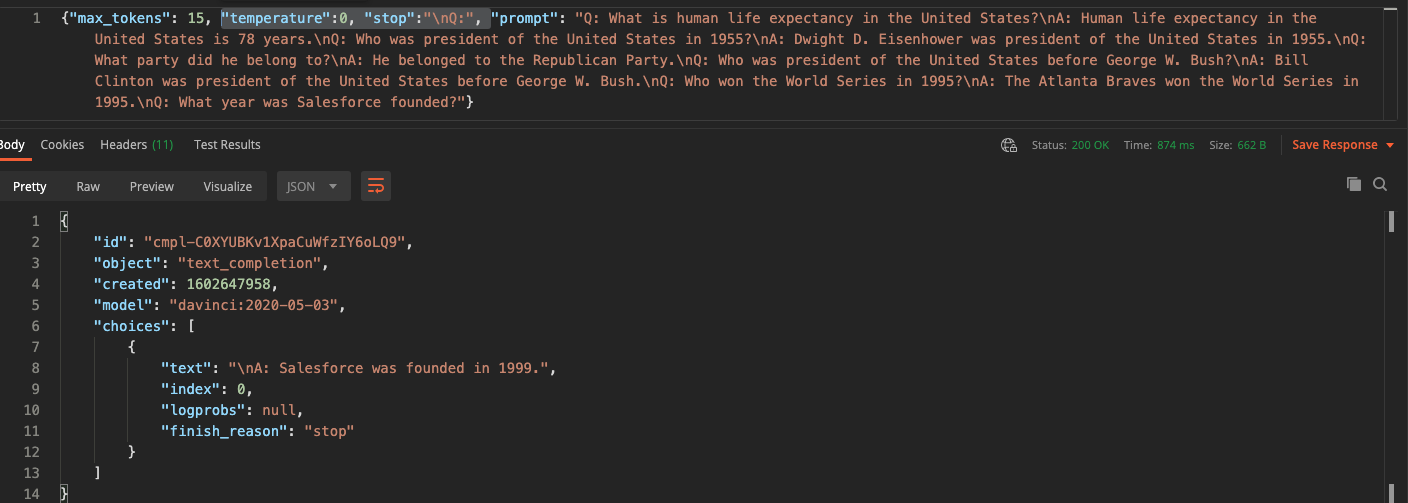

Let's examine the CRM use case. Perhaps you want to only sell your product to startups, so you decide to filter your CRM prospects by founding year.

However, when you go to filter, you find most prospect records are missing data in that field.

GPT can synthesize the founding year of a company, as well as other trivia facts.

Do note that the accuracy of the response is heavily dependent upon how unique and well known a company is.

You'll also see several other synthetic query prompts in that overall prompt. There are quite a few opportunities in this area if you're willing to tinker with the prompt.
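Because accuracy varies, it is worth sanity-checking each answer before writing it to the CRM. A minimal sketch, assuming the completion is free text that should contain a four-digit year; `parse_founding_year` and the `earliest` bound are hypothetical.

```python
import re
from datetime import date


def parse_founding_year(completion_text, earliest=1800):
    """Return the first plausible four-digit year in the text, or None."""
    for match in re.findall(r"\b(\d{4})\b", completion_text):
        year = int(match)
        # Reject years before the cutoff or in the future
        if earliest <= year <= date.today().year:
            return year
    return None


print(parse_founding_year(" 2009\n"))
print(parse_founding_year("I'm not sure"))
```

This only filters out implausible values; it cannot tell whether a plausible year is correct, so spot-checking a sample against a trusted source is still a good idea.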

other

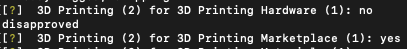

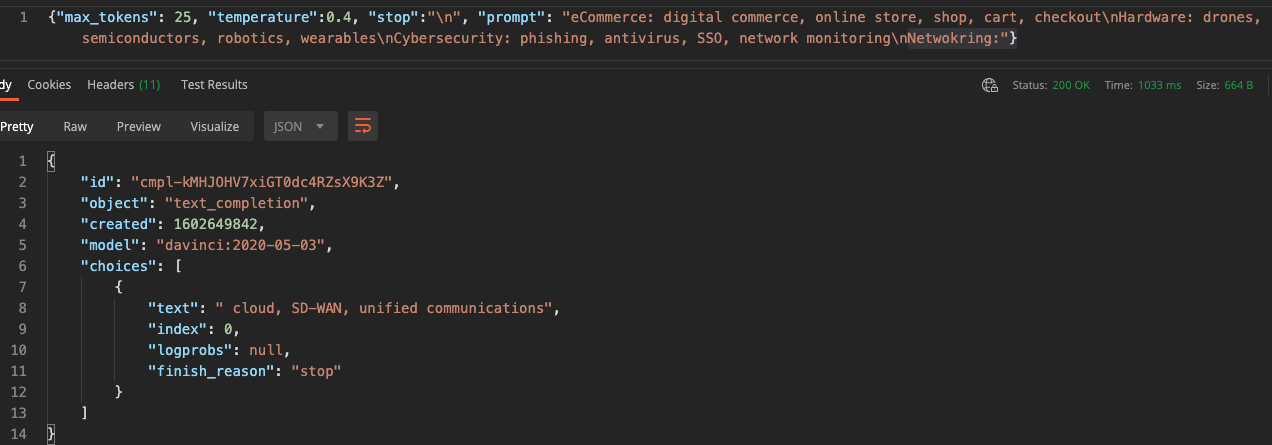

One more use case that ties in with the above Classification section is category standardization.

Imagine you have a series of company categories:

- landing page builders

- heatmapping

- image compression

- and so on

You want to roll these up to a parent category, CRO SaaS, such that it will be applied any time each of those tags is applied.

GPT-3 can generate child tags from parent tags and vice versa, allowing you to quickly make a category tag lookup source of truth:

Interestingly here, I misspelled networking in the prompt, and GPT-3 still returned an accurate response.
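Once the parent and child tags are generated and reviewed, the lookup itself is a plain dictionary. A sketch using the categories above; `build_parent_lookup` and `roll_up` are hypothetical helper names.

```python
def build_parent_lookup(parent_to_children):
    """Invert a parent -> children mapping into a child -> parent lookup."""
    lookup = {}
    for parent, children in parent_to_children.items():
        for child in children:
            lookup[child.strip().lower()] = parent
    return lookup


def roll_up(tags, lookup):
    """Add the parent category for every tag that has one, keeping the originals."""
    parents = {
        lookup[tag.strip().lower()]
        for tag in tags
        if tag.strip().lower() in lookup
    }
    return sorted(set(tags) | parents)


taxonomy = {
    "CRO SaaS": ["landing page builders", "heatmapping", "image compression"],
}
lookup = build_parent_lookup(taxonomy)
print(roll_up(["heatmapping"], lookup))
```

Storing the mapping parent-first keeps it easy to review by hand, while the inverted lookup makes tagging each record a single dictionary access.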

business implications

If you're thinking about building a business on top of GPT-3, I generally encourage you not to.

There are a few good overviews of why that business would be indefensible and expensive here and here.

Both were written before pricing became live. The consideration of # of characters in the input meaningfully worsens the economics for certain use cases (like categorizing lots of free text).

That all said, GPT-3 can add significant value to an existing company with a strong understanding of its unit economics.

To model out an example of using GPT-3 to seed most of the content in a blog post (but still be human edited):

- average blog post has 1700 words, 8,000 characters

- you make an average of 10 API calls of 1,500 characters each to seed content for a new blog post

- total costs: $0.19

- your content marketer is $80,000/year and writes 2 blog posts/week

- if GPT-3 data makes them 30% more efficient, you 'save' $24,000/year for $25.31 worth of credits

- the ROI is (probably) even higher in consideration of the revenue generated by leads from those posts
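The arithmetic behind that model can be sketched as follows. The function name and the assumption that the efficiency gain shows up as proportionally more posts are mine; with the rounded $0.19 per post, the credit spend lands within a dollar of the figure above.

```python
def yearly_roi(salary, efficiency_gain, cost_per_post, posts_per_week, weeks=52):
    """Return (salary value of time saved, yearly credit spend) for seeded posts."""
    time_saved_value = salary * efficiency_gain
    # Assumption: the efficiency gain turns into proportionally more posts written
    posts_per_year = posts_per_week * (1 + efficiency_gain) * weeks
    credit_spend = posts_per_year * cost_per_post
    return time_saved_value, credit_spend


saved, spent = yearly_roi(
    salary=80_000, efficiency_gain=0.30, cost_per_post=0.19, posts_per_week=2
)
print(f"saved ${saved:,.0f} for ${spent:,.2f} in credits")
```

Swapping in your own salary, post volume, and per-post cost shows quickly whether the credits are a rounding error or a real line item for your use case.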

Here are the GitHub links again, all in one place:

- Github: GPT-Create-Glossary-Definitions

- Github: GPT-Categorize-Twitter-Users

- Github: Find-Similar-Tags

- Github: gpt-sandbox

- Github: GPT-2-Simple

Thanks for reading. Questions or comments? 👉🏻 alec@contextify.io