how to scrape

tl;dr: Use the Python requests and BeautifulSoup libraries to scrape data from most websites that serve their content as plain HTML

cost: $0

build time: 30 minutes (MVP) / 120 minutes (cleaner v2)

BeautifulSoup parses the raw HTML pulled down from a website into a tree that you can navigate (in JavaScript, this is called "walking the DOM"). Generally, you'll want structured information from one or more particular HTML elements and can throw away the rest of the page.
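As a small illustration, the sketch below parses a made-up HTML string and walks the resulting tree with `find`, `.parent`, and `find_all`. It uses `html.parser`, Python's built-in parser, so nothing beyond `bs4` is needed.

```python
from bs4 import BeautifulSoup

# A made-up HTML snippet standing in for a downloaded page.
html = """
<html><body>
  <div id="listing">
    <h2>Products</h2>
    <ul>
      <li class="item">Widget</li>
      <li class="item">Gadget</li>
    </ul>
  </div>
  <footer>Unrelated footer text</footer>
</body></html>
"""

soup = BeautifulSoup(html, "html.parser")

# Jump straight to one element, then move around the tree from there.
heading = soup.find("h2")
print(heading.get_text())    # Products
print(heading.parent["id"])  # listing

# Keep only the elements we care about and ignore the rest (e.g. the footer).
items = [li.get_text() for li in soup.find_all("li", class_="item")]
print(items)  # ['Widget', 'Gadget']
```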

To get started scraping a particular page, you'll need to

- Check that it's accessible with Python requests (some sites detect and block non-human traffic)

- Find the relevant HTML selectors

- Write a quick script to pull the web page and print the contents of the selected elements

- Debug, clean the generated data, and clean up your code

- (Optional) productionize your scraping code

#1 - check scrapability

Here's a quick and easy script to check. If you intend to make many requests, you'll want to route them through a proxy so your IP address doesn't get blocked.

```python
import requests
from bs4 import BeautifulSoup


def request_site(url):
    # Spoof a typical browser header. HTTP header names are case-insensitive.
    headers = {
        "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36",
        "referer": "https://www.google.com/",
        # "br" (Brotli) is left out: requests only decodes it if a Brotli package is installed.
        "accept-encoding": "gzip, deflate",
        "accept-language": "en-US,en;q=0.9",
        "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",
        "cache-control": "no-cache",
        "upgrade-insecure-requests": "1",
        "DNT": "1",
    }

    # requests follows redirects by default, so a 301/302 never shows up as the
    # final status code; accept any 2xx instead. The timeout keeps a stalled
    # server from hanging the script.
    response = requests.get(url, headers=headers, timeout=10)
    if not 200 <= response.status_code < 300:
        print(f"Failed request; status code is: {response.status_code}")
        return None

    parsed = BeautifulSoup(response.content, "html.parser")
    print(parsed)
    return parsed
```
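If you do send many requests through a proxy, one way to wire that up is a `requests.Session`, which reuses connections and carries headers and proxy settings across calls. This is a minimal sketch: `PROXY_URL` is a hypothetical placeholder for your own proxy, and the one-second pause between requests is an arbitrary choice.

```python
import time

import requests

# Hypothetical placeholder; replace with your proxy provider's address.
PROXY_URL = "http://user:password@proxy.example.com:8080"


def make_session(proxy_url=None):
    """Build a session that sends browser-like headers and, optionally, uses a proxy."""
    session = requests.Session()
    session.headers.update({
        "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36",
        "accept-language": "en-US,en;q=0.9",
    })
    if proxy_url:
        # requests chooses a proxy by the target URL's scheme, so set both keys.
        session.proxies.update({"http": proxy_url, "https": proxy_url})
    return session


def fetch_all(session, urls, delay_seconds=1.0):
    """Fetch each URL in turn, pausing between requests to avoid hammering the site."""
    pages = {}
    for url in urls:
        response = session.get(url, timeout=10)
        if response.ok:
            pages[url] = response.text
        time.sleep(delay_seconds)
    return pages
```

Usage would look like `fetch_all(make_session(PROXY_URL), ["https://example.com/a", "https://example.com/b"])`, with your real URLs in place of the example ones.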

#2 - find HTML selectors

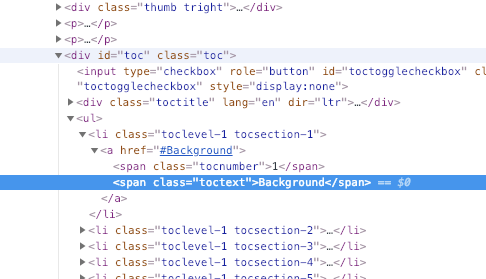

Visit the site in question in your browser, right-click the element you want, and choose Inspect to open it in the DevTools Elements panel. In the example pictured, I'm using a Wikipedia page.

In this case, we learn that the element we selected (dark blue) is a span with class “toctext”.

However, to get all 14 entries in that list, you need every instance of that selector, so first select the containing element (light blue): a div with id “toc”.
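BeautifulSoup can also express "every toctext span inside the toc div" as a single CSS selector through `select()`, which mirrors how DevTools describes elements. The sketch below runs against a simplified, made-up stand-in for that table-of-contents markup, not Wikipedia's actual HTML. `select()` is backed by the `soupsieve` package, which recent `beautifulsoup4` releases install as a dependency.

```python
from bs4 import BeautifulSoup

# Simplified stand-in for a table of contents: a div with id "toc" holding
# one span with class "toctext" per entry, plus a decoy span outside it.
html = """
<div id="toc">
  <ul>
    <li><a href="#one"><span class="tocnumber">1</span> <span class="toctext">Section one</span></a></li>
    <li><a href="#two"><span class="tocnumber">2</span> <span class="toctext">Section two</span></a></li>
  </ul>
</div>
<span class="toctext">A span outside the toc that should be ignored</span>
"""

soup = BeautifulSoup(html, "html.parser")

# "div#toc span.toctext" matches every span with class toctext nested anywhere
# inside the div whose id is toc.
entries = [span.get_text() for span in soup.select("div#toc span.toctext")]
print(entries)  # ['Section one', 'Section two']
```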

#3 - get that data

To get the contained span elements from the parsed page, all you need is:

```python
import requests
from bs4 import BeautifulSoup

response = requests.get("https://en.wikipedia.org/wiki/Outline_of_science", timeout=10)
parsed = BeautifulSoup(response.content, "html.parser")

containing_div = parsed.find("div", {"id": "toc"})
if containing_div is None:
    # find() returns None when nothing matches, e.g. if the page markup has changed.
    raise SystemExit('No div with id "toc" found; re-check the selector in DevTools.')

all_toctext_spans = containing_div.find_all("span", {"class": "toctext"})
for toctext_span in all_toctext_spans:
    print(toctext_span.get_text())
```

This prints the text of each entry in the page's Contents list.

Most structured-data scraping produces tabular data (rows and columns). I recommend building each row as a dictionary and appending those dictionaries to a list (an example is provided in the repo below).
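A minimal sketch of that row-per-dictionary pattern, using a made-up HTML table: the column names come from the header row, and the standard library's `csv.DictWriter` writes the list of dictionaries out. This is an illustration of the pattern, not the code from the repo.

```python
import csv
import io

from bs4 import BeautifulSoup

# Made-up table standing in for scraped page content.
html = """
<table id="prices">
  <tr><th>Item</th><th>Price</th></tr>
  <tr><td>Widget</td><td>2.50</td></tr>
  <tr><td>Gadget</td><td>4.00</td></tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")
table = soup.find("table", id="prices")
header_row, *data_rows = table.find_all("tr")

# Column names come from the header cells.
columns = [th.get_text(strip=True) for th in header_row.find_all("th")]

# One dictionary per row, collected into a list of dictionaries.
rows = []
for tr in data_rows:
    cells = [td.get_text(strip=True) for td in tr.find_all("td")]
    rows.append(dict(zip(columns, cells)))

print(rows)  # [{'Item': 'Widget', 'Price': '2.50'}, {'Item': 'Gadget', 'Price': '4.00'}]

# The same list of dicts writes straight to CSV (here into an in-memory buffer).
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=columns)
writer.writeheader()
writer.writerows(rows)
print(buffer.getvalue())
```

Keeping rows as dictionaries means a missing or reordered column shows up as a wrong key rather than silently shifting values into the wrong field.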

#4 - cleaning

Resources for extracting, cleaning, and speeding up your build time can be found in this Mastering BeautifulSoup guide.
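One small example of that kind of cleanup, on a made-up paragraph: `decompose()` removes an unwanted tag (here, a footnote marker) from the tree, and splitting and rejoining the text collapses stray whitespace. Which tags count as noise depends entirely on your target page, so treat this as a sketch rather than a general recipe.

```python
from bs4 import BeautifulSoup

# Made-up paragraph with messy whitespace and a footnote marker.
html = "<p>\n  Science   is a  systematic\n  enterprise.<sup>[1]</sup>  </p>"

paragraph = BeautifulSoup(html, "html.parser").find("p")

# Remove footnote markers from the tree entirely before extracting text.
for sup in paragraph.find_all("sup"):
    sup.decompose()

# split() with no arguments splits on any run of whitespace, so rejoining with
# single spaces both collapses internal gaps and trims the ends.
cleaned = " ".join(paragraph.get_text().split())
print(cleaned)  # Science is a systematic enterprise.
```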

#5 - productizing

For a full-stack implementation, visit this GitHub repo.
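One common hardening step when productionizing a scraper is retrying transient failures. A minimal sketch using requests' `HTTPAdapter` with urllib3's `Retry`; the retry count, backoff factor, and status codes are arbitrary example values, not settings taken from the repo.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def make_retrying_session(total_retries=3, backoff_factor=0.5):
    """Session that retries connection errors and common transient HTTP statuses."""
    retry = Retry(
        total=total_retries,
        backoff_factor=backoff_factor,  # the sleep between attempts grows exponentially
        status_forcelist=[429, 500, 502, 503, 504],
    )
    adapter = HTTPAdapter(max_retries=retry)
    session = requests.Session()
    # Apply the adapter to every http:// and https:// URL this session fetches.
    session.mount("http://", adapter)
    session.mount("https://", adapter)
    return session


session = make_retrying_session()
# response = session.get("https://example.com", timeout=10)
```

If every retry on a listed status code fails, requests raises `requests.exceptions.RetryError`, so a production loop would catch that and log or skip the URL.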

Thanks for reading. Questions or comments? 👉🏻 alec@contextify.io