set up Google Sheets APIs (and treat Sheets like a database)

tl;dr: set up programmatic reads and writes from any Google Sheet you have access to

cost: $0

build time: 15 minutes (MVP)

Let's start with a hot take to get the blood boiling.

Google Sheets is (probably) the most important database your company uses.

Why?

Every single employee can (and does) use it.

For most rapidly growing companies, this means valuable information gets siloed into Sheets. Teams and individuals inadvertently make those Sheets into sources of truth. Worst still, whole workflows will be built around using, cleaning, and manipulating that siloed data.

Below, I'll walk through setting up the auth, programmatic reads and writes (with Python), advanced operations like unique-appends, and data syncing for Google Sheets. If you're looking to write automations for users of Sheets, I recommend you check out my article on AppScript, the in-Sheets Javascript platform.

- auth setup

- reads

- writes

- databasey stuff

#1 - auth setup

#1.1 - go to the Google API Console

You might be surprised that this exists if you don't use GCP. I was too. It's relatively comprehensive.

Make sure you're signed into the account you want the auth tied to (and not accidentally your personal account)

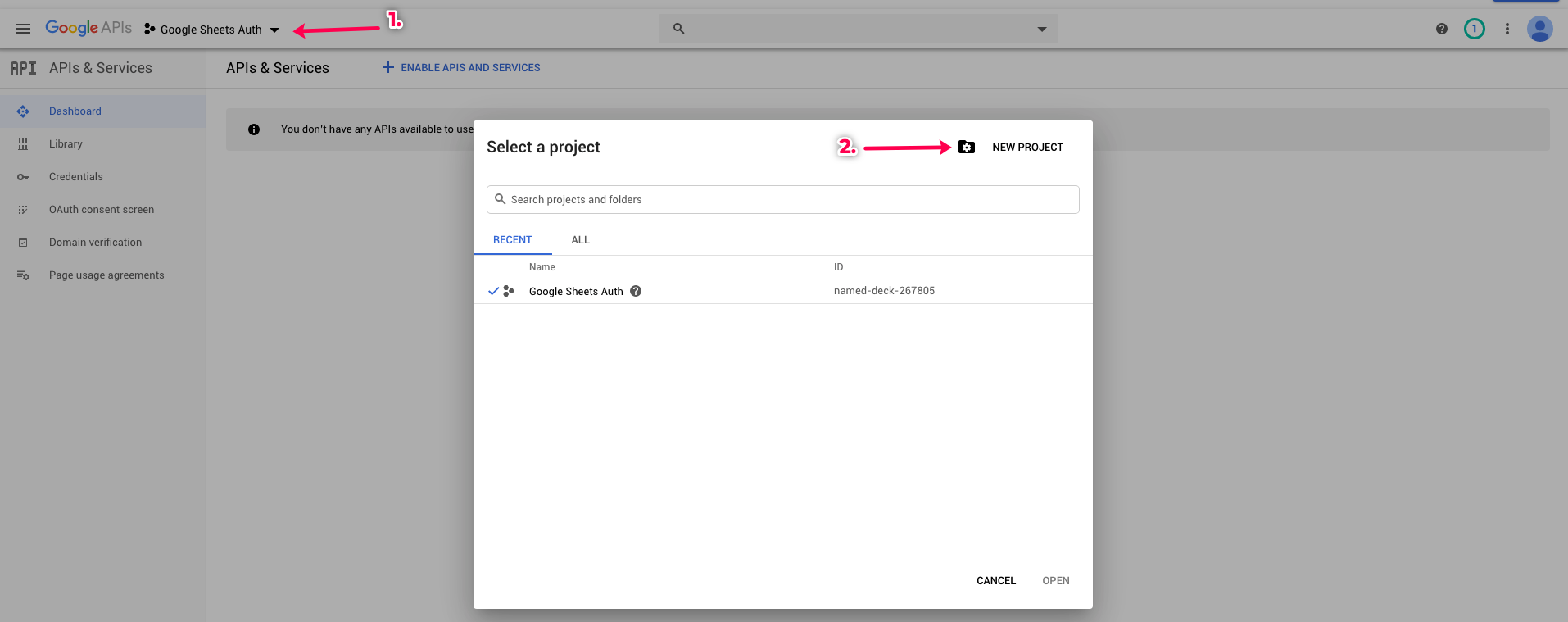

#1.2 - create a new project

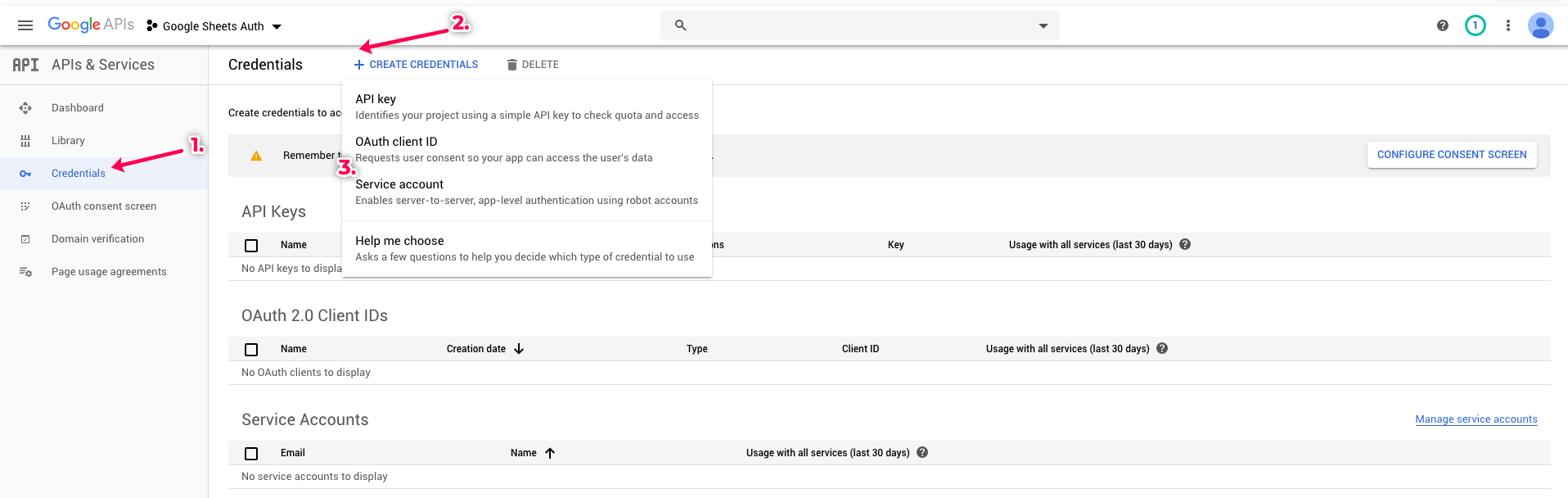

#1.3 - create a set of new credentials

#1.4 - select a service account

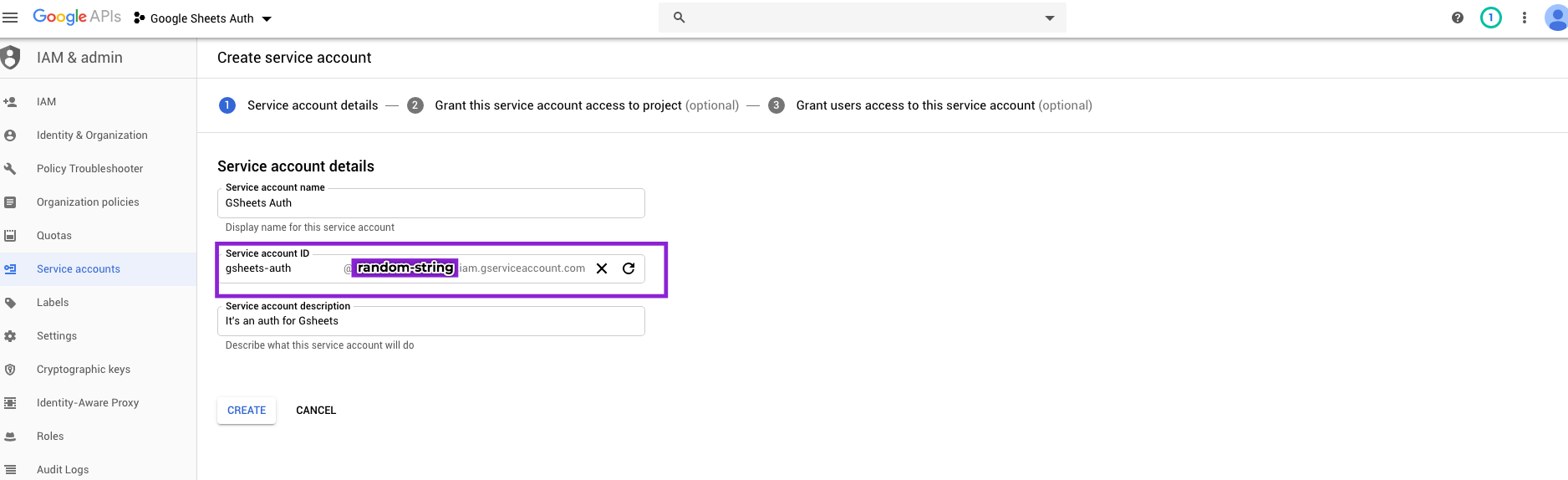

It will generate a Service account ID for you. This is the email you will share Sheets to so you can programmatically access them

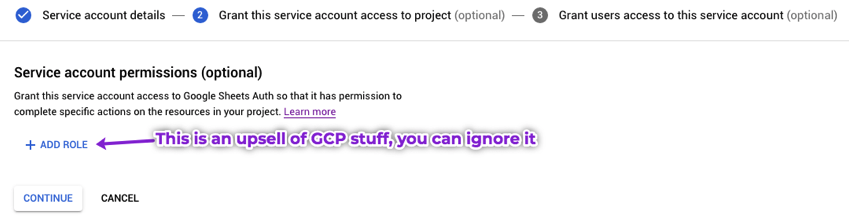

#1.5 - ignore permissions

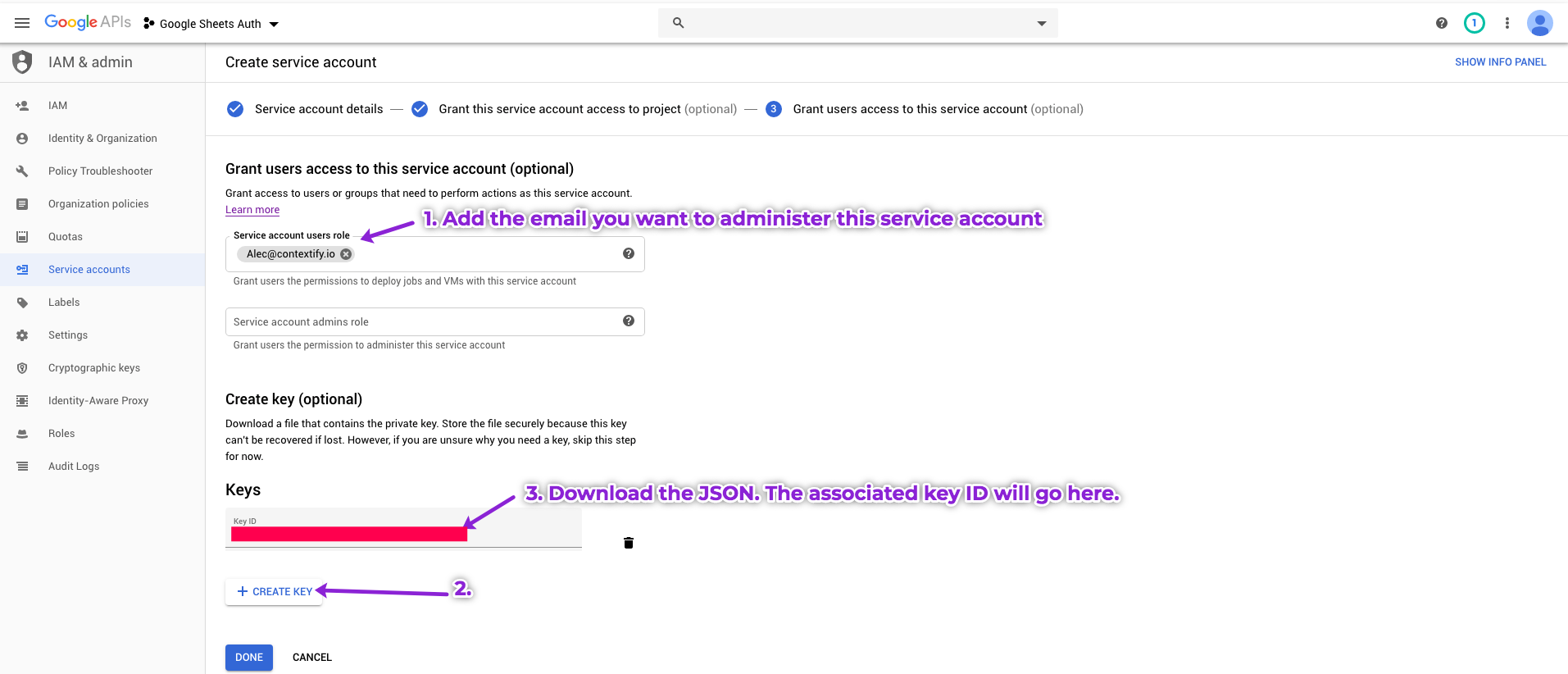

#1.6 - finish and download credentials

Double check the email you want to administer this service account is the same one you're logged in as. After you click CREATE KEY, it will generate a JSON string. I store mine in 1Pass.

#1.7 - but wait, you're not done yet

We still need to enable the Google Sheets API for our API Console project.

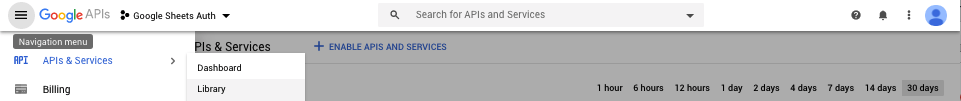

Let's quickly go to the Library

Search for Google Sheets -> Click Enable

If you want to enable gc.open() (opening Sheets by their name), which you generally do, you also need to go enable the Google Drive API.

#1.8 - make a given Sheet programmatically accessible

the service account we created above with the gsheest-auth@random-string.iam.gserviceaccount.com address has access to nothing to start. To make a Google Sheet accessible, share the Sheet with that address as if it were any other email.

#2 - auth

#2.1 - introducing GSpread (and pandas)

In the below examples, I'll be using GSpread, an open source wrapper of the Google Sheets API. Google, at times, recommends it over the API itself. I'll also be using Pandas for complicated writes. If your environment is not amenable to new packages, feel free to stop here.

#2.2 - auth code

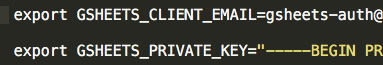

There are two ways for your code to auth into GSpread: a local JSON file or environment variables. If the latter, you'll want to fetch the private key and client email you got from the JSON in step 1.5.

The JSON file auth is a little easier:

import gspread

from google.oauth2.service_account import Credentials

def auth_gspread():

scopes = ['https://www.googleapis.com/auth/spreadsheets']

credentials = Credentials.from_service_account_file('/path/to/secret.json', scopes=scopes)

gc = gspread.authorize(credentials)

return gc

Alternately, let's say you're running Google Sheets reads/writes in AWS Lambda, like I am. You can create a dict from env vars and pass it to from_service_account_info

import os

import gspread

from google.oauth2.service_account import Credentials

from google.oauth2 import service_account

def auth_gspread():

auth = {

"private_key": os.environ["GSHEETS_PRIVATE_KEY"].replace("\\n", "\n").replace('"', ''),

"client_email": os.environ["GSHEETS_CLIENT_EMAIL"],

"token_uri": "https://oauth2.googleapis.com/token",

}

scopes = ['https://www.googleapis.com/auth/spreadsheets']

credentials = Credentials.from_service_account_info(auth, scopes=scopes)

gc = gspread.authorize(credentials)

return gc

Why not just generate a permanent API Key? Turns out they are only usable for public data

#2 - simple reads

access a worksheet (I'll refer to them as tab) on a Google Sheet:

def open_gsheet(sheet_name):

gc = auth_gspread()

if 'docs.google.com' in sheet_name:

sh = gc.open_by_url(sheet_name)

elif len(sheet_name) == 44:

sh = gc.open_by_key(sheet_name)

else:

sh = gc.open(sheet_name)

worksheet_list = [x.title for x in sh.worksheets()]

return sh, worksheet_list

def get_gsheet_tab(sh, tab_name, **kwargs):

if isinstance(tab_name, int): # index

tab = sh.get_worksheet(tab_name)

elif isinstance(tab_name, str):

tab = sh.worksheet(tab_name)

# Controls for weird bug where get_all_records() on an empty sheet will raise IndexError

try:

tab_lod = tab.get_all_records(default_blank=kwargs.get("default_blank", None))

except IndexError:

tab_lod = []

return tab, tab_lod

#2.3.1 - an example of reads:

sh, tab_names = open_gsheet("1tgTWvAKqX-qOABGtdAZIeJpjOEDro2iDGMS4O8z1fFA")

tab, tab_data_as_list_of_dicts = get_gsheet_tab(sh, "Sheet2")

#2.4 - other helpful methods

get all values in a given range(s) on Sheet2

tab_data_as_list_of_lists = tab.get('A1:B2')

# if you want to fetch multiple ranges at once:

tab_data_as_list_of_lists_of_lists = tab.batch_get(['A1:B2', 'C1'])

get all cells matching a string or regex:

cell_list = tab.findall("Rug store")

# or, for a regex:

criteria_re = re.compile(r'(Small|Room-tiering) rug')

cell_list_2 = tab.findall(criteria_re)

convert any of the above into a Pandas DataFrame

tab_df = pd.DataFrame(tab_data_as_list_of_dicts)

# or, for list_of_lists:

tab_df = pd.DataFrame.from_records(tab_data_as_list_of_lists)

final note: you will have problems with whole-tab reads if you have duplicate column headers in your tab.

#3 - simple writes

#3.1 - create a new tab

if wrapped in a function, you can provide defaults (here, 50 rows and 5 cols)

def create_gsheet_worksheet(sh, tab_name, **kwargs):

return sh.add_worksheet(

title=tab_name,

rows=kwargs.get("rows", "50"),

cols=kwargs.get("cols", "5")

)

#3.2 - simple range (over)write

for range overwrites, keep in mind only the provided new values (the second argument, the list of lists) will update. Any cells in the range (the first argument) that don't have according values won't be changed. In the below example, A2 becomes 42, A3 becomes 43, and A4 and B2:B4 are unchanged

tab.update('A2:B4', [[42], [43]])

# or, with a DataFrame:

tab.update([your_df.columns.values.tolist()] + your_df.values.tolist())

#3.3 - simple tab (over)write

(starts at A1)

tab.clear()

tab.update(your_list_of_lists)

# optionally, you can resize the tab

tab.resize(

rows=len(your_list_of_lists),

cols=len(your_list_of_lists[0])

)

#4 - complicated writes (it's DB time y'all)

The above sections can be helpful for pet projects, or for Sheets that are infrequently changed. But what if you wanted to support:

- overwrites (effectively a DROP + CREATE)

- appends

- append only new rows that have unique primary keys

- append only uniques and deduplicate existing rows

And conduct basic data hygiene and maintenance, like:

- periodically replicate to an external datalake

- dropping empty rows & columns

- deduplicating on a primary key

- rearranging and standardizing column ordering

All while nontechnical users can interface with it easily and versioning is provided free, out of the box.

Further, what if you wanted to add the ability to make those writes from anywhere? Like, for example, in response to a SQS message or a new CSV being added to an S3 bucket.

(the solution is too long to include here, but here's the link to the repo. You can clone the repo, deploy with serverless, and have Lambdas ready to go to make those operations)

git clone git@github.com:alecbw/GSpread-Advanced-Writes-www.alec.fyi.git

#4.1 repo overview

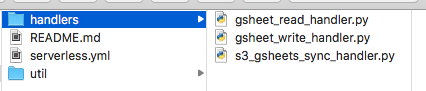

This repo contains a serverless.yml infrastructure-as-code file, which deploys 3 Lambdas:

- A GSheet Read Lambda (

gsheet_read_handler) - A GSheet Write Lambda (

gsheet_write_handler) - A GSheet -> S3 data sync cron service (

s3_gsheets_sync_handler)

and a S3 bucket:

* gsheet-backup-bucket-${env:AWS_ACCOUNT_ID}

#4.2 testing locally

sls invoke local -f gsheet-read -d '{"Gsheet":"1tgTWvAKqX-qOABGtdAZIeJpjOEDro2iDGMS4O8z1fFA", "Tab":"Sheet1"}'

sls invoke local -f gsheet-write -d '{"Gsheet":"GSpread Testing Sheet", "Tab":"Sheet1","Type":"Overwrite", "Data":[{"col1":"hello","col2":world},{"col1":232,"col2":"mixed type columns are OK"}]}'

# to use the sync, you'll need to set the GSheet ID and Tag as env vars

export GSHEET_ID=44charIDorURL

export GSHEET_TAB=Sheet2

export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)

sls invoke local -f s3-sync

#6 - other

a full list of GSpread methods can be found here

#6.1 - rate limiting

This version of the Google Sheets API has a limit of 100 requests per 100 seconds per user (and total 500 requests per 100 seconds per project). Limits for reads and writes are tracked separately. There is no daily usage limit. You can easily hit this limit if you have a bunch of asynchronous workers trying to read and write in parallel.

#6.2 - working with Pandas DataFrames

converting to DataFrame

df = pd.read_json(data, orient="records") # JSON

df = pd.DataFrame(data) # list of dicts

df = pd.DataFrame.from_dict(data) # dict with list values

df = pd.DataFrame.from_records(data) # list of lists

df = pd.read_sql(sql, connection) # SQL from a DB

converting from DataFrame

data_lod = df.to_dict("records") # list of dicts

data_json_str = df.to_json() # JSON

data_lol = df.values.tolist() # list of lists

Thanks for reading. Questions or comments? 👉🏻 alec@contextify.io